A new class action lawsuit reframes HR tech risk around privacy and consumer reporting laws like FCRA and California’s ICRAA.

On January 20, 2026, a class action lawsuit was filed against Eightfold AI (Kistler v. Eightfold AI) in the Northern District of California. The filing marks a significant shift in the legal scrutiny of HR technology. Unlike recent cases focused on algorithmic bias, such as Mobley v. Workday, this case centers on privacy and consumer reporting.

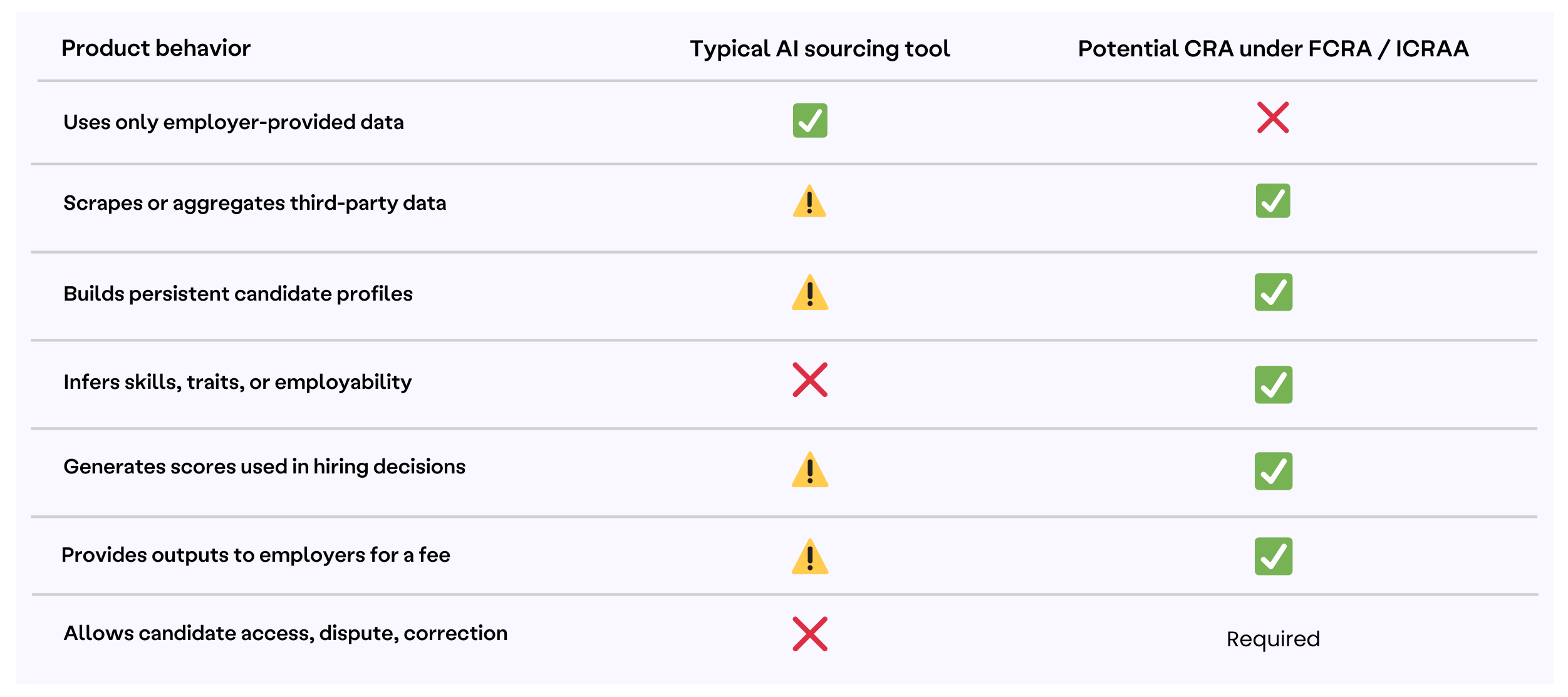

The central allegation is that by scraping data to build "rich talent profiles" and assigning "Match Scores," the vendor is acting as a Consumer Reporting Agency (CRA).

This triggers compliance obligations under two specific statutes: the federal Fair Credit Reporting Act (FCRA) and California’s Investigative Consumer Reporting Agencies Act (ICRAA).

To assess the implications for employers, it helps to look at how these statutes define their key terms and how regulators are applying those definitions to AI.

Enacted in 1970, the FCRA regulates the collection, dissemination, and use of consumer information. Although it is most often associated with credit checks, it also strictly governs reports used for "Employment Purposes" (hiring, promotion, reassignment, and retention).

The Definition of a CRA: Under the FCRA, any entity that, for a fee, regularly "assembles" or "evaluates" consumer information for the purpose of furnishing consumer reports to third parties is a CRA.

The "Algorithmic Score" Clarification: In 2024, the Consumer Financial Protection Bureau (CFPB) issued Circular 2024-06, explicitly addressing "Background Dossiers and Algorithmic Scores." The Circular clarified that:

"A company... could meet this standard [of a CRA]... if the entity collects consumer data in order to train an algorithm that produces scores or other assessments about workers for employers."

This does not mean that all AI-driven sourcing or scoring tools automatically qualify as consumer reporting, but it does mean the boundary is no longer theoretical.

The lawsuit also cites the Investigative Consumer Reporting Agencies Act (ICRAA). This California statute is broader than the FCRA and specifically regulates "Investigative Consumer Reports."

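What is an Investigative Consumer Report? Unlike a standard credit report, an investigative report contains information on a consumer's character, general reputation, personal characteristics, or mode of living, obtained through any means.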

Key Differences for Employers: The ICRAA imposes stricter requirements on employers (users) and vendors than the federal standard does.

A common defense in the "Talent Intelligence" sector is that data scraped from public sources (e.g., LinkedIn, GitHub) falls outside these laws. However, earlier litigation suggests otherwise.

In Halvorson v. TalentBin, settled in 2017, the plaintiffs alleged that aggregating public social media data for recruitment produced consumer reports. The resulting $1.15 million settlement did more than require a payment: it forced the vendor to fundamentally change its product to comply with FCRA standards.

The Eightfold complaint builds on this, alleging that "inferring" skills or "evaluating" a candidate based on public data falls under the definition of "assembling" a report on a consumer’s character or reputation.

If an AI tool is deemed a Consumer Reporting Agency under FCRA or ICRAA, the burden of compliance falls heavily on the employer using the tool.

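Under FCRA & ICRAA, employers generally must:

- Give the candidate a clear, conspicuous written disclosure, in a standalone document, that a report may be obtained for employment purposes.
- Obtain the candidate's written authorization before procuring the report.
- Before taking adverse action based on the report, provide a copy of the report and a summary of the candidate's rights.
- After taking adverse action, provide an adverse action notice.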

The Critical Question for Leaders: Organizations using "sourcing" or "enrichment" tools should consider auditing their vendors. If a regulator asked you today whether this tool's output constitutes a "consumer report" under CFPB Circular 2024-06, could you answer confidently?

Warden AI provides independent bias auditing for HR AI systems. This post is for informational purposes only and does not constitute legal advice.