Tenzo maps its AI interviewing platform to California’s FEHA requirements, including monthly bias testing across 12 protected classes, human oversight, and documentation.

Tenzo removes busywork from talent teams (sourcing, outreach, screening, and scheduling) so that humans can focus on final judgement calls and relationship-building.

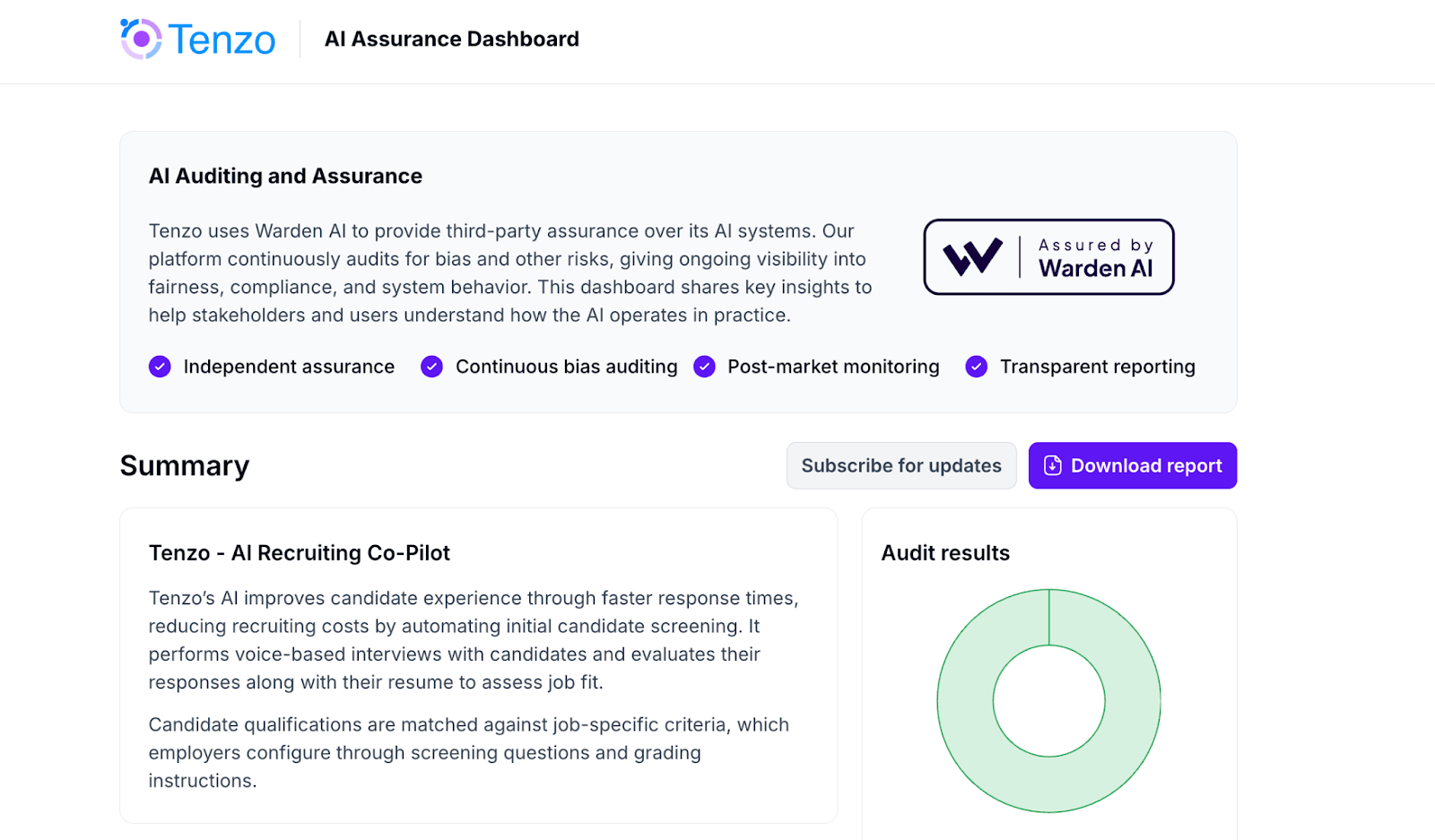

The AI interviewing platform conducts voice-based interviews with candidates, then evaluates their responses alongside their résumé to assess job fit.

Candidate qualifications are matched against job-specific criteria, which employers configure through screening questions and grading instructions.

Customers are aware that AI systems can exacerbate bias, and Tenzo sees an opportunity to address buyer concerns by adopting best practices in bias auditing.

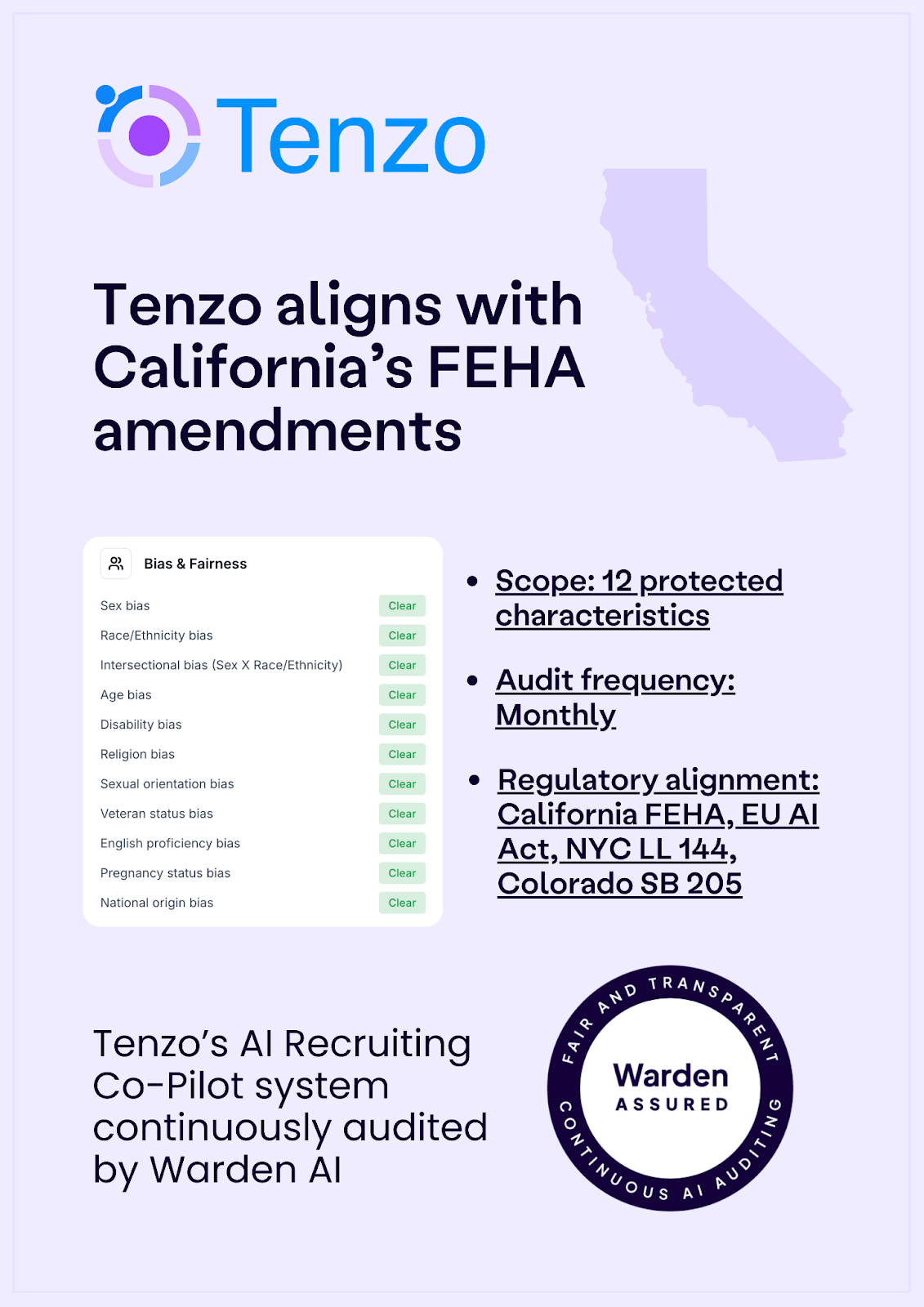

California’s FEHA amendments apply both to employers and to vendors whose technology influences employment decisions.

Because Tenzo operates in the enterprise space, ensuring the product is aligned with California’s FEHA requirements is non-negotiable.

We play in the enterprise space, so for every customer we talk to, trust and bias are a concern.

The FEHA amendments apply broadly to automated decision-making systems used in employment, including AI interviewing and co-pilot systems like Tenzo.

While FEHA does not prescribe a single technical standard, the law sets clear expectations that employment tools must not discriminate across a wide range of protected classes.

Tenzo maps to the following California FEHA expectations around AI used in employment:

Under California FEHA, vendors and employers may be held liable both for intentional discrimination and for facially neutral practices that have a disparate impact on any protected class.

Warden’s dual testing techniques (disparate impact analyses and counterfactual analyses) help Tenzo map to FEHA by running robust testing and applying mitigation measures before deployment.
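The two kinds of analysis named above can be sketched in a few lines of Python. This is a hypothetical illustration, not Warden’s or Tenzo’s implementation. The disparate impact check computes each group’s selection rate and divides it by the highest group’s rate; flagging ratios below 0.8 follows the "four-fifths" rule of thumb from the US EEOC Uniform Guidelines, which FEHA does not itself mandate. The counterfactual check re-scores otherwise identical inputs that differ only in one attribute value and measures how far the scores move. The function names, the 0.8 threshold, the template, and the toy scorer are all assumptions made for illustration.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical helpers for illustration only; not Warden's or Tenzo's code.


def selection_rates(outcomes: List[Tuple[str, bool]]) -> Dict[str, float]:
    """Fraction of candidates in each group who advanced (True)."""
    totals: Dict[str, int] = {}
    advanced: Dict[str, int] = {}
    for group, passed in outcomes:
        totals[group] = totals.get(group, 0) + 1
        advanced[group] = advanced.get(group, 0) + int(passed)
    return {g: advanced[g] / totals[g] for g in totals}


def impact_ratios(outcomes: List[Tuple[str, bool]]) -> Dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        # Nobody advanced in any group, so there is no rate gap to measure.
        return {g: 1.0 for g in rates}
    return {g: r / best for g, r in rates.items()}


def flag_disparate_impact(
    outcomes: List[Tuple[str, bool]], threshold: float = 0.8
) -> List[str]:
    """Groups whose impact ratio falls below the (assumed) four-fifths threshold."""
    return sorted(g for g, ratio in impact_ratios(outcomes).items() if ratio < threshold)


def counterfactual_gap(
    score: Callable[[str], float], template: str, values: List[str]
) -> float:
    """Spread of scores when only the {attr} slot in `template` changes."""
    scores = [score(template.format(attr=v)) for v in values]
    return max(scores) - min(scores)


if __name__ == "__main__":
    outcomes = (
        [("A", True)] * 8 + [("A", False)] * 2
        + [("B", True)] * 5 + [("B", False)] * 5
    )
    print(impact_ratios(outcomes))          # {'A': 1.0, 'B': 0.625}
    print(flag_disparate_impact(outcomes))  # ['B']

    def fair(text: str) -> float:
        return 0.7  # toy scorer that ignores the candidate text entirely

    template = "Candidate {attr}: 5 years of customer support experience."
    print(counterfactual_gap(fair, template, ["Alex", "Maria"]))  # 0.0
```

In this toy data, group B advances at 50% against group A’s 80%, giving an impact ratio of 0.625, so B is flagged. A counterfactual gap of zero means the scorer’s output did not change when only the swapped attribute changed; a real audit would run many templates and attributes and would treat any nonzero gap as something to investigate rather than as proof of bias.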

Warden audits Tenzo’s AI Recruiting Co-pilot across 12 protected attributes and proxies, including sex, race, intersectionality, age, disability, religion, sexual orientation, veteran status, English proficiency, pregnancy status, and national origin. Check out the full list of protected classes here.

Tenzo also implements objective scorecards, protected-class removal layers, and human oversight so that the product does not make or recommend hiring decisions based on non-objective standards.

Read more about how Tenzo recommends hiring based on skills, and not people or résumés.

Monthly bias audits and continuous testing enable Tenzo to catch potential bias early, before material model updates or feature launches.

Although monthly bias testing isn’t explicitly required by California regulators, if bias occurs in a system that isn’t being monitored, the legal defense can be significantly weaker.

Tenzo retains a record of each audit for the required minimum of four years, or longer if customers request it.

Tenzo selected Warden AI because they deeply understand bias in the HR tech and recruiting space.

Tenzo was looking for a comprehensive solution that would provide FEHA-level coverage.

We chose Warden because we wanted monthly bias audits to show our seriousness to companies and clients, and to ensure that any changes to our model are checked, given how quickly we’re developing.

The Tenzo team has some exciting new agents coming out that further reduce the time recruiters spend on non-essential activities.

They are also deepening integrations with applicant tracking systems (ATSs) to make Tenzo’s agents even more seamless teammates for recruiters.

Learn more here about what California FEHA means for vendors.