
Discover how Mega HR chose to stress-test their AI system at an important stage of product development.

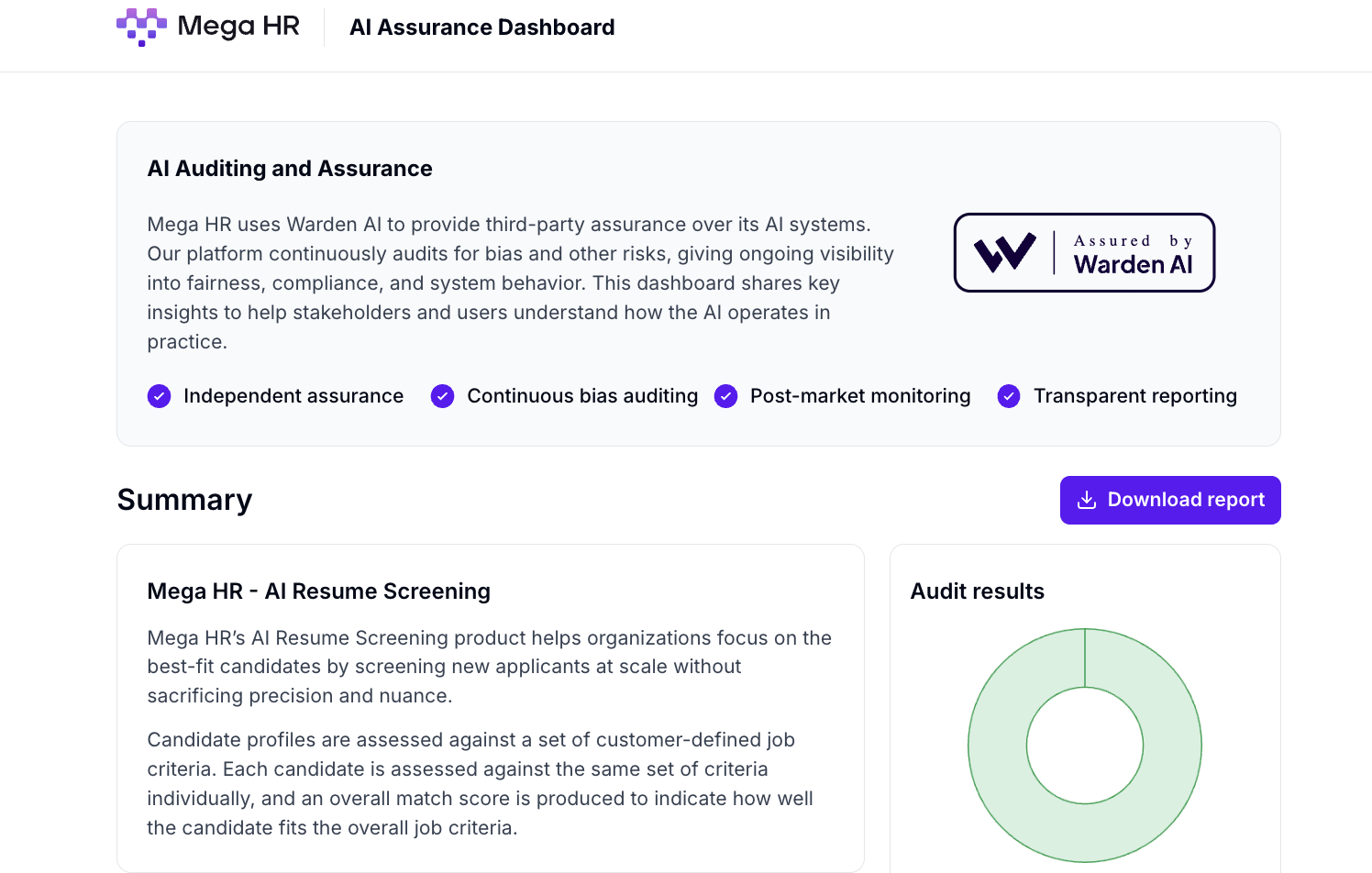

Mega HR is developing an advanced AI hiring partner designed to elevate hiring quality and continuously improve talent strategy. Mega HR’s AI assists with screening, ranking, and surfacing candidates based on predefined competencies and requirements.

Their mission is to help employers make smarter and fairer hiring decisions through AI-driven talent evaluation tools that prioritize transparency, consistency, and measurable job relevance above all else.

Mega HR chooses to undergo independent, ongoing bias testing under the Warden Assured methodology.

During the early stages of Mega HR’s product development, Warden tested its AI-driven resume screening system.

Their system helps organizations focus on the best-fit candidates by screening new applicants at scale without sacrificing precision and nuance.

Candidate profiles are assessed against a set of customer-defined job criteria. Each candidate is evaluated individually against the same criteria, and the system produces an overall match score indicating how well that candidate fits the job requirements.
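To make the scoring step concrete, here is a minimal Python sketch of criteria-based matching. It assumes, purely for illustration, that each criterion has already been scored between 0 and 1 and that the overall match score is a weighted average. `Criterion`, `overall_match_score`, the weights, and the example criteria are all hypothetical; this is not Mega HR's actual implementation.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    """Hypothetical customer-defined job criterion with a relative weight."""
    name: str
    weight: float


def overall_match_score(criterion_scores: dict[str, float], criteria: list[Criterion]) -> float:
    """Combine per-criterion scores (assumed to lie in [0, 1]) into one weighted match score.

    A criterion with no score for this candidate counts as 0 (an illustrative assumption).
    """
    total_weight = sum(c.weight for c in criteria)
    if total_weight <= 0:
        raise ValueError("criterion weights must sum to a positive number")
    weighted_sum = sum(c.weight * criterion_scores.get(c.name, 0.0) for c in criteria)
    return weighted_sum / total_weight


# Hypothetical job criteria: the same list is applied to every candidate.
criteria = [
    Criterion("python_experience", 2.0),
    Criterion("stakeholder_communication", 1.0),
    Criterion("team_leadership", 1.0),
]
candidate = {"python_experience": 0.9, "stakeholder_communication": 0.6, "team_leadership": 0.5}
print(round(overall_match_score(candidate, criteria), 3))  # 0.725
```

Because every candidate is scored against the same `criteria` list, the resulting scores are directly comparable across candidates, which is what makes consistency checkable in the first place.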

During early development, Mega HR engaged in independent bias testing to evaluate the system for three reasons:

Rather than waiting for customer audits or regulatory scrutiny, Mega HR proactively engaged Warden to test its system in a controlled, pre-release environment.

Mega HR’s AI resume screening system was evaluated using Warden’s purpose-built test dataset. Multiple bias-detection techniques were applied to evaluate the system’s behavior on this dataset at the time of testing.

The pre-launch testing evaluated whether the system’s outputs showed evidence of potential bias across protected characteristics.

The process included:

Testing was conducted under simulated hiring conditions designed to reflect real-world use cases.

All findings were documented to create an audit trail that could support future regulatory or enterprise review.

During counterfactual testing, Warden’s analysis produced results that fell below average thresholds for certain protected groups. As with most pre-release tests, these findings were not unexpected, and they provided important insight into areas for refinement before the system could become Warden Assured.
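Counterfactual testing checks whether a system's score changes when only a protected-characteristic signal changes. The sketch below illustrates the general idea under stated assumptions: `score_fn` stands in for the screening system, each base resume contains a hypothetical `{marker}` placeholder that is swapped for a group-associated signal (such as a name), and groups are compared by their mean score relative to the best-scoring group. The 0.8 cutoff is a placeholder borrowed from the common four-fifths rule of thumb, not Warden's actual threshold, and all function names here are hypothetical.

```python
from statistics import mean
from typing import Callable


def counterfactual_ratios(
    base_resumes: list[str],
    group_markers: dict[str, str],
    score_fn: Callable[[str], float],
) -> dict[str, float]:
    """Score each resume once per group, changing only the '{marker}' placeholder.

    Returns each group's mean score divided by the highest group mean, so 1.0 marks
    the best-scoring group and lower values indicate a gap.
    """
    group_means: dict[str, float] = {}
    for group, marker in group_markers.items():
        # Everything except the group signal stays identical across variants.
        variants = [resume.replace("{marker}", marker) for resume in base_resumes]
        group_means[group] = mean(score_fn(v) for v in variants)
    best = max(group_means.values())
    if best <= 0:
        raise ValueError("scores must be positive to compute ratios")
    return {group: m / best for group, m in group_means.items()}


def flag_groups(ratios: dict[str, float], threshold: float = 0.8) -> list[str]:
    """Return groups whose ratio falls below the (placeholder) threshold."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


def toy_score(text: str) -> float:
    """Deliberately flawed stand-in for a screening model, so the test has something to find."""
    score = 0.9 if "python" in text.lower() else 0.5
    if "Marker-B" in text:
        score -= 0.3  # Penalty tied only to the group signal: exactly what the test should catch.
    return score


resumes = ["{marker}. Five years of Python development.", "{marker}. Retail management experience."]
markers = {"group_a": "Marker-A", "group_b": "Marker-B"}
ratios = counterfactual_ratios(resumes, markers, toy_score)
print(flag_groups(ratios))  # ['group_b']
```

Comparing group means is only one simple way to summarize counterfactual results; a real evaluation would typically use many more resume pairs and may also examine per-pair score differences.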

The findings were handled through Warden’s continuous assurance approach: audit, review the results, remediate any issues, and repeat.

Bias evaluation is rarely linear. Systems evolve, inputs change, prompts are refined, and model behavior can shift over time.

In cases where remediation is necessary, Warden Assured status is issued only after the recommendations have been followed and a re-audit has taken place.

The Warden approach operates as an ongoing loop of testing, fixing, and monitoring until the system meets the standard.

Mega HR collaborated with Warden’s technical team to investigate the root cause.

To strengthen its safeguards, Mega HR:

By quickly identifying and resolving a potential imbalance before launch, Mega HR reinforced its commitment to safe and transparent AI.

Rather than waiting for customer scrutiny or regulatory pressure, the team chose to stress-test the system at an important stage of product development.

The outcome was a stronger assurance process with greater transparency and insight into model behavior.

Mega HR is also compliant with NYC Local Law 144 (LL 144).
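For context on LL 144: bias audits under NYC Local Law 144 report impact ratios. For tools that output scores, the NYC DCWP rules define a category's scoring rate as the share of individuals in that category who score above the sample's median score, and its impact ratio as that scoring rate divided by the scoring rate of the highest-scoring category. The simplified sketch below computes that calculation. The function name and example data are hypothetical, and a real audit also covers intersectional categories and other requirements not shown here.

```python
from statistics import median


def scoring_rate_impact_ratios(scores: list[float], categories: list[str]) -> dict[str, float]:
    """Impact ratio per category for a scoring tool, following the LL 144 definitions.

    Scoring rate = share of a category scoring above the sample median.
    Impact ratio = category scoring rate / highest category scoring rate.
    """
    if not scores or len(scores) != len(categories):
        raise ValueError("need one category label per score")
    cutoff = median(scores)
    rates: dict[str, float] = {}
    for category in sorted(set(categories)):
        in_category = [s for s, c in zip(scores, categories) if c == category]
        rates[category] = sum(s > cutoff for s in in_category) / len(in_category)
    top = max(rates.values())
    if top == 0:
        raise ValueError("no category scored above the median; ratios are undefined")
    return {category: rate / top for category, rate in rates.items()}


# Hypothetical example data: six candidates in two categories.
scores = [0.9, 0.8, 0.4, 0.3, 0.7, 0.2]
categories = ["x", "x", "x", "y", "y", "y"]
print(scoring_rate_impact_ratios(scores, categories))  # {'x': 1.0, 'y': 0.5}
```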

Check out the Mega HR AI Assurance Dashboard here.

Read more about Mega HR's commitment to fairness.