Harper vs. SiriusXM shows AI bias in hiring is already a legal risk. Both vendors and employers must show their work, or face the courts.

SiriusXM is the latest employer to face an AI hiring bias lawsuit. Plaintiff Arshon Harper claims the company’s use of AI in hiring unlawfully discriminated against Black applicants.

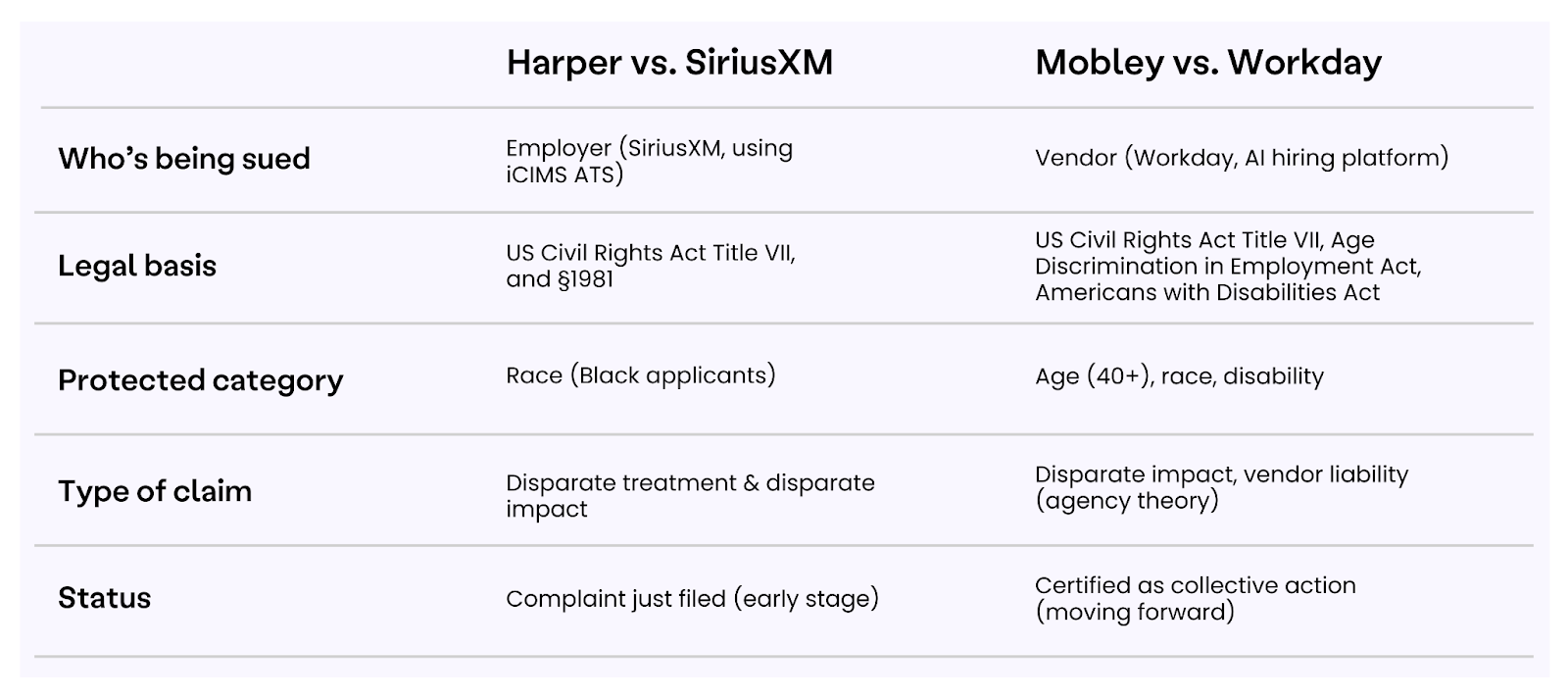

Here are the case facts:

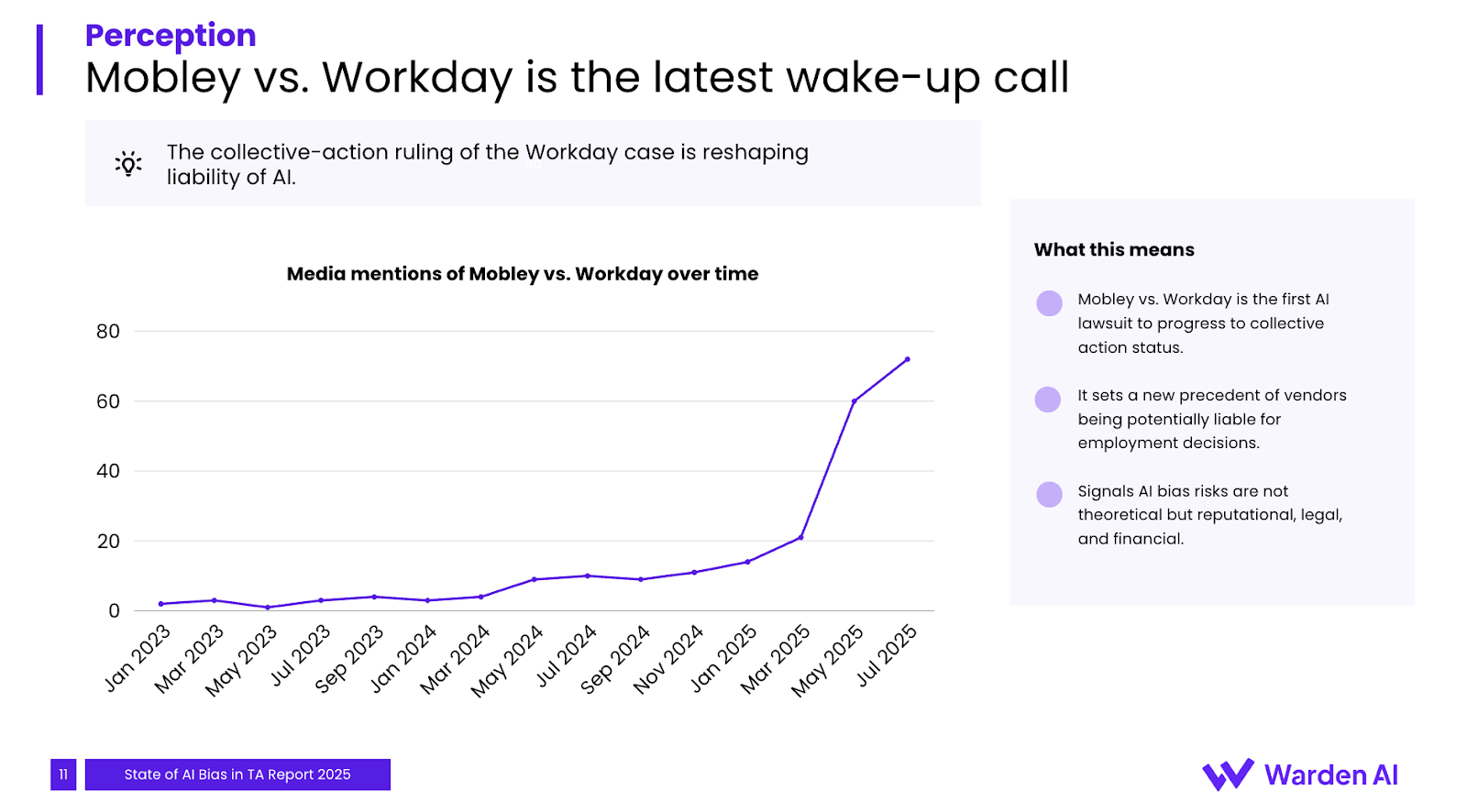

This lawsuit arrives on the heels of Mobley vs. Workday, where the court allowed a collective action to proceed against an AI vendor.

This case shows the importance of both vendors and employers showing their work.

The difference between these cases is significant.

In Mobley, the spotlight was on the vendor, Workday, and whether an AI provider can be held liable for discrimination under agency theory.

In Harper, the defendant is the employer, SiriusXM.

Together, these cases highlight a shared reality. Both builders and users of AI hiring tools carry legal risk.

Liability doesn’t stop at the vendor. It extends to the organizations that deploy these systems in real hiring decisions.

Importantly, neither case hinges on newly passed AI regulations.

Both rely on long-standing US civil rights laws (Title VII, §1981, the Age Discrimination in Employment Act, and the Americans with Disabilities Act), this time applied to new technology.

This makes the legal risk immediate, not hypothetical or “down the line” when future AI regulation kicks in. Legal risk and reputational damage are already here.

The Harper vs. SiriusXM lawsuit doesn’t come out of nowhere. Instead, it reflects the very risks we tracked in our State of AI Bias in Talent Acquisition Report.

Our report data showed:

In short, lawsuits like Harper and Mobley aren’t isolated events.

They’re part of a larger shift where existing civil rights law is being applied to AI in hiring, and both vendors and employers are squarely in the spotlight.

AI bias will continue to dominate headlines. But the deeper issue is defensibility.

In a world where allegations can be filed in days, the real challenge is whether companies can produce hard evidence showing how an AI system works, what decisions it impacted, and whether adverse impact occurred.

Without that audit trail, vendors and employers alike are left exposed.
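One piece of that evidence, the adverse impact check, can be sketched in a few lines. The example below is a minimal illustration, not a legal test. It computes selection rates by group and compares each one to the highest rate, using the four-fifths (80%) rule of thumb from the EEOC's Uniform Guidelines on Employee Selection Procedures. The group labels, counts, and function names are hypothetical, and nothing here reflects the data or methods at issue in Harper or Mobley.

```python
from dataclasses import dataclass


@dataclass
class GroupOutcome:
    group: str
    applicants: int
    advanced: int  # applicants the tool passed to the next stage

    @property
    def selection_rate(self) -> float:
        # Share of applicants in this group who advanced
        return self.advanced / self.applicants if self.applicants else 0.0


def impact_ratios(outcomes):
    """Return each group's selection rate divided by the highest group's rate."""
    top_rate = max(o.selection_rate for o in outcomes)
    if top_rate == 0:
        return {o.group: 0.0 for o in outcomes}
    return {o.group: o.selection_rate / top_rate for o in outcomes}


def flag_adverse_impact(outcomes, threshold=0.8):
    """List groups whose impact ratio falls below the four-fifths threshold."""
    return [g for g, r in impact_ratios(outcomes).items() if r < threshold]


# Made-up numbers, for illustration only
sample = [
    GroupOutcome("group_a", applicants=200, advanced=60),
    GroupOutcome("group_b", applicants=150, advanced=27),
]
ratios = impact_ratios(sample)
flagged = flag_adverse_impact(sample)
print(ratios, flagged)
```

A ratio below 0.8 is a screening signal that calls for closer review, not a finding of discrimination. In practice, a check like this would run on logged outcomes at each stage where the AI system influenced a decision, so the results can be produced when questions arise.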

AI in hiring isn’t a “compliance problem” waiting for future laws to mature.

It’s a defensibility problem unfolding now, under long-standing civil rights law.

Those who can’t show their work won’t just face compliance challenges. They’ll face the courts.